In a recent post on LinkedIn Will Thalheimer asked:

How often does YOUR ORG use ONE or more LEARNER SURVEY questions related to DEI [Diversity, Equity, Inclusion]?

And Arnold Horshack took up residence in my head until I was able to post a quick reply.

https://youtu.be/ju-lptJweTc

I do that, I said.

Now I feel like I need to explain what I do and maybe provide a bit of a backstory to explain why. (Sigh, damn you Horshack.)

This story begins back in 2003 or 2004. I was working for a non-profit society and the Executive Director at the time was working on his Ph.D. Part of his process included a study of Cynefin, complexity, and systems thinking. He was generous enough to share some of his learning, and that grew within me a deep interest in sensemaking and narrative inquiry. I even read an early chapter of Bramble Bushes in a Thicket – Narrative and Intangibles of learning networks (Kurtz, Snowden). At that time I only understood a few points. I did understand enough to know that 1. It was important, and 2. I needed to go back to school, which I did… eventually. This, along with my take on the No Limits to Learning report by the Club of Rome, were seeds that grew and eventually wound through everything I thought about… everything…

Becoming Feedback Informed

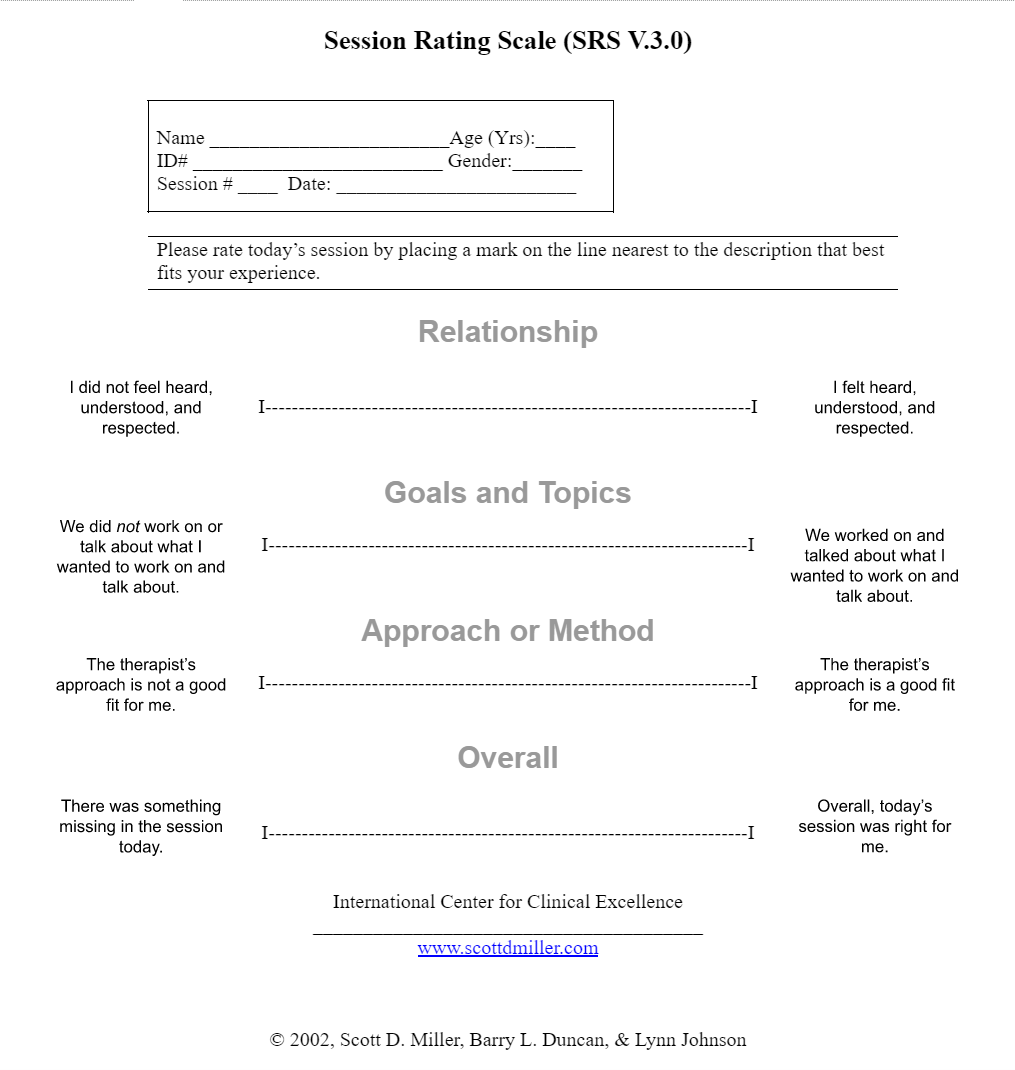

Skip forward to 2009. I was working as a substance abuse counsellor and was fortunate enough to be selected to attend a conference featuring Scott Miller. Scott’s sessions on SuperShrinks changed my counselling practice overnight. I blogged about his sessions in Supershrinks – Part 1 and Part 2. The sessions focused on the power of deliberate practice through the use of Feedback Informed Treatment (FIT) and introduced tools like the Session Rating Scale (SRS) shown below.

The Session Rating Scale (SRS) is just one of many tools used within a Feedback Informed Treatment (FIT) process. There are versions for children, youth, groups, and supervisors. The scaled sheet is given to a client immediately after the counselling session, and if the rating is under a certain level it is addressed immediately. It is the counsellor’s job to take responsibility for the tone, progression, and the client’s experience of the session. The scales offer opportunities for immediacy and, combined with the Outcome Rating Scale (ORS), are excellent predictors of client dropout, providing opportunities to keep clients connected and in counselling.

The licence to use the scales in paper and pencil format is available for free to individual practitioners. The non-profit I was working with at the time invested in group licences and the software. It was amazing. My clients absolutely loved it. I hated it… at first.

Sessions I thought had gone swimmingly received the worst feedback. It was very painful, but I persevered and got better… And again, my clients loved being asked to provide honest feedback on their experience of the session.

Most importantly it opened up the conversation reminding me to ask questions like, How might we approach this in a way that works better for you? Or, What can I do to make you feel more heard, understood, and respected? Or even, Is there something else we should be focusing on?

Sensemaking

Moving on, I went back to school (again) and earned an Interdisciplinary MA studying both learning and technology, and leadership. During that process I conducted a participatory action research project using… yes, narrative inquiry. During this time I was also working for ThoughtExchange, who were then a tech start-up developing an online platform aimed at privileging people’s voices – so a new kind of narrative inquiry platform.

Concurrently-ish, Cynefin launched their SenseMaker software, and I believe Cynthia Kurtz launched her own participatory narrative inquiry software, NarraFirma, around this time under a General Public License.

I think it’s important to note that all sensemaking processes should get you to the point of asking “How do we get more of this (good stuff) and less of that (not good stuff)?”

Leaning Toward Open

After my MA was finally done I began working as an instructional designer for non-profits and in higher education. One position included designing education and training related to Fire & Safety where I was working on internationally accredited programs and shared test banks within an experiential learning framework. This fueled my interest in both experiential learning and authentic assessment.

This, combined with the narrative inquiry stuff, was rattling around in my head along with feedback informed treatment (which I had extended to feedback informed teaching and designing), and at this point learning transfer had joined the pack. These all influenced how I designed feedback and assessment instruments. I leaned heavily towards open-ended, qualitative questions, asking for examples of experiences when I was unable to use direct assessment of learning. This was not a popular lean at the time.

I think many of the folks who design all kinds of feedback instruments in all kinds of organisations come from a research background. They approach post (experience) surveys like they would a census. They design primarily quantitative questions, presuming they know the right choices to provide. They default to a 5-point Likert scale because someone told them it provides the most valid results. And because they have, in many cases, years of 5-point scales at hand they can use these to show gains and losses. The problem of course is that these rarely tell you why something is the way it is, or how you might improve.

I won’t go into all the other problems that can haunt surveys, such as priming or biasing language, acquiescence bias, neutral bias… It goes on and on…

Smile Sheets

Then a few years ago another piece fell from the sky. Will Thalheimer published Performance-Focused Smile Sheets: A Radical Rethinking of a Dangerous Art Form.

I was already familiar with Will’s work with the CDC and his online presence and was looking forward to this book. Finally, a champion for a different kind of survey to assess learning experiences. As I read the book I was literally yelling “It’s about time!” out loud. (Covid lockdown changed my inside voice to an outside voice.)

Based on Will Thalheimer’s Smile Sheets book I designed a slew of survey questions that could be used in a variety of situations. I also bought Will’s second edition, Performance-Focused Learner Surveys, and incorporated many of the gems from that book into my recent program evaluations, and course and post-event surveys.

I think it’s worth highlighting that most of the learning experiences that take place in organizations are not assessed. If you go to a professional development workshop or take compliance training, your understanding of the content or ability to perform in real life is not tested. Not in an authentic and meaningful way, anyway. We tend to treat most learning experiences in organizations as if they are inoculations that work invisibly and either need yearly boosters or go on in perpetuity. As a result, we really do need to use questions written in the ways Will promotes, like the one below, to evaluate the effectiveness of programs and courses.

In a recent leadership development program pilot I used Will Thalheimer’s process and survey examples to create formative and summative evaluation surveys and an interview guide. One of the evaluation survey questions – perhaps the only question that really mattered – was:

What types of goal setting and action planning did you do during the program?

Choose the ONE option below that best reflects your experience during this program.

Add your comments or reasons for your choices.

The goals and objectives for the program included a strong focus on transferring the learning into leadership practice. The theory of change assumptions were that by including goal setting and action planning, participants would be more likely to make this transfer into their leadership practice.

This one question provided me with a tremendous amount of actionable feedback and evidence that will support significant changes in the program design and delivery.

Diversity, Equity, and Inclusion

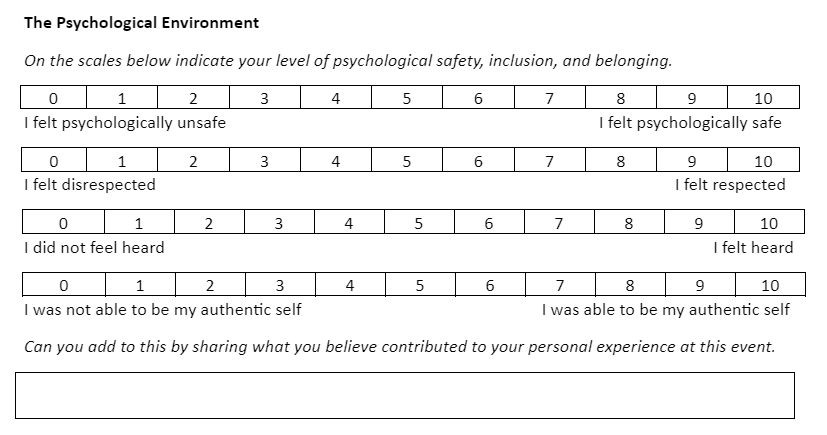

My next really impactful experience – that finally links back to the original Horshack event – was with a Diversity, Equity, and Inclusion task force. I had, whenever able, included questions about respect and safety in post-event surveys. Many of the reasons for this are outlined in this post.

I also reflexively try to help the folks at the edges. I tend to not design or assess for the middle of the curve. I design and assess for the folks that are struggling, not for the top performers. Top performers need a different kind of support and can be an invaluable source of information, but I don’t design just for them.

I believe it’s more important to know if one person felt like they didn’t belong in a learning experience than it is to know that 10 others did. That one person’s feedback may be actionable and might help me design an experience that allows them, and everyone else, to feel an increased sense of belonging. That matters most.

I don’t want to discount positive experiences. They need to be inquired about in a way that lets us get to the “How do we get more of this and less of that?” questions. And yes, if you’ve done any Appreciative Inquiry work you’ll recognize parts of this.

This was part of an anonymous survey used after a 3-day, retreat-style staff meeting. For smaller groups I like an 11-point scale, or a line with no numbers that can be measured afterwards (like the Outcome Rating Scales). In this case I offered the 0-10 scale, but the final version had been changed to a 5-point scale. Research on what works best regarding scale length and presentation is not conclusive.

In a more perfect world responses under a certain level would have led to Feedback Informed Treatment (FIT) type questions like:

- How might we approach this in a way that works better for you?

- What can we do in the next meeting to allow you to feel more heard, understood, and respected?

- What changes can we make to allow you to feel like you belong?

Survey Analysis

In analyzing surveys that include these kinds of open-ended questions the BIG question should be:

How do we get more of the good stuff (psychological safety, diversity, equity, inclusion, and feeling like you belong are good) and less of the not-so-good stuff?

I also believe we need to do this with a real focus on what we can do as designers, instructors, facilitators, and leaders.

We can’t control anyone else and a lot of the time we don’t have the positional power to get all the questions that need to be asked into our evaluations or surveys – but we can try.

So, thanks Will, for the great question.

How often does YOUR ORG use ONE or more LEARNER SURVEY questions related to DEI [Diversity, Equity, Inclusion]?

Not nearly enough, but we’re working on it 🙂