Creating promotional videos, heck, any kind of video, can feel like a monumental task, especially when you’re trying to showcase something tech-related like an AI workshop. To streamline the process, and to have a bit of a play, I decided to experiment with two AI-powered workflows for creating an AI-augmented, podcast-style promotional video. Each workflow and its AI tools had strengths and challenges, and the end results were pretty interesting, imo. Here’s how each workflow unfolded and what I learned along the way.

Workflow 1: NotebookLM + HeyGen

For the first workflow, I leaned on NotebookLM to generate the audio for the podcast. This workflow was more involved but ultimately delivered a more refined result. The goal was to produce a short, engaging 3-4 minute video featuring two coworkers discussing the upcoming workshop.

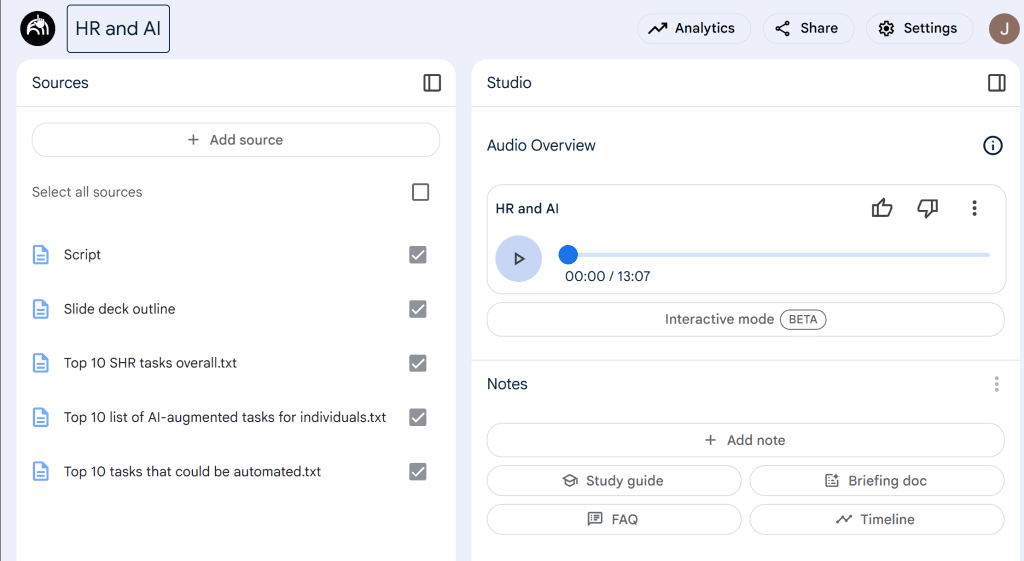

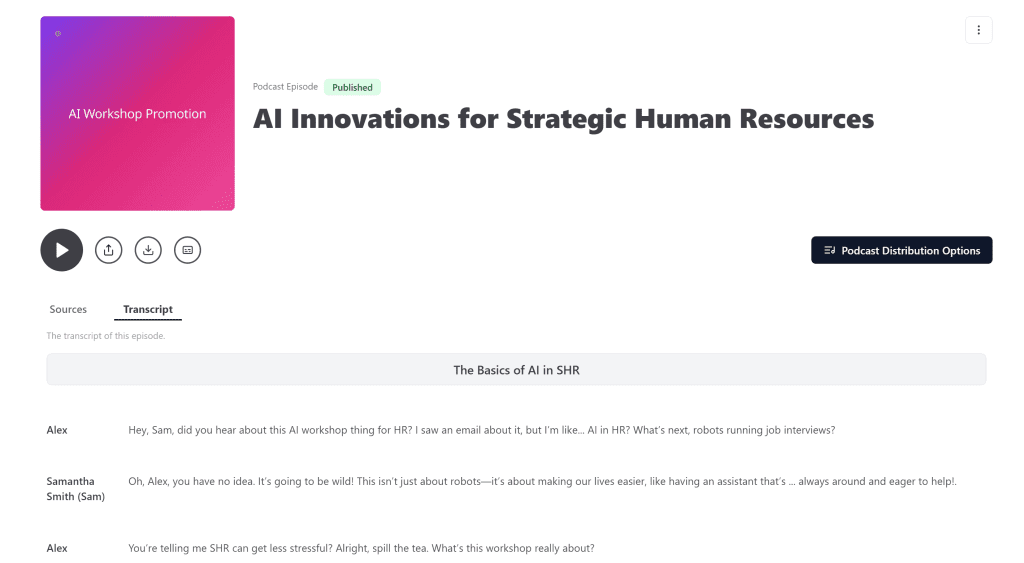

1. Script refinement and context building: ChatGPT helped me draft customization prompts for NotebookLM, and I uploaded the workshop outline and a sample script to provide context. NotebookLM’s ability to create a podcast with natural-sounding voices and banter is unrivaled, as of this writing.

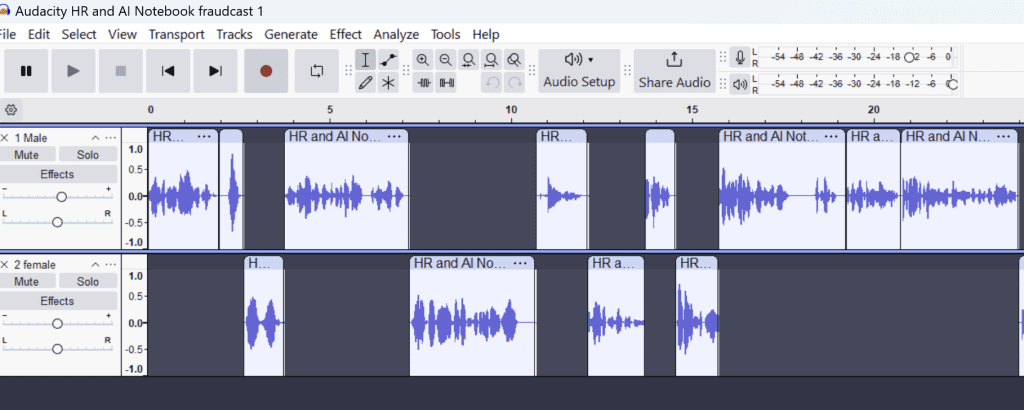

2. Audio generation and editing: NotebookLM produced the audio for the podcast (which my colleague, Kimberly Dunn, has accurately dubbed a “fraudcast”). I downloaded the audio and used Audacity (a free audio editor) to split the single audio track into two separate tracks, one for each host. This step allowed me to refine the timing, cut the runtime to under 5 minutes, and remove some less-than-perfect moments (goodbye, awkward and other-worldly sounding giggles and guffaws). I exported the edited Audacity tracks as two separate MP3s.

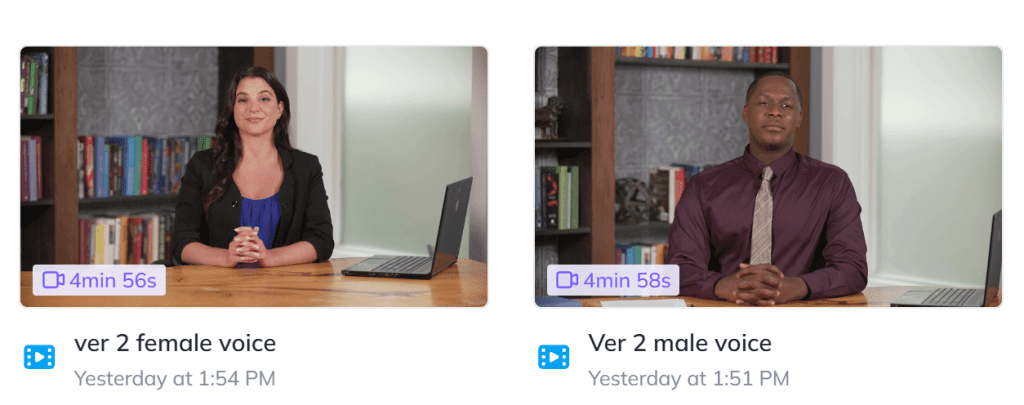

3. Video avatars: I used HeyGen to create an avatar for each host. I have a paid subscription, but you could do this with the free version. I uploaded each MP3 to the appropriate avatar to create a video. To be clear, I had two separate videos at this point: one for the female host with just her audio, and one for the male host with just his. HeyGen did a pretty good job of syncing the avatars to the voices. Minimal uncanny valley 🙂

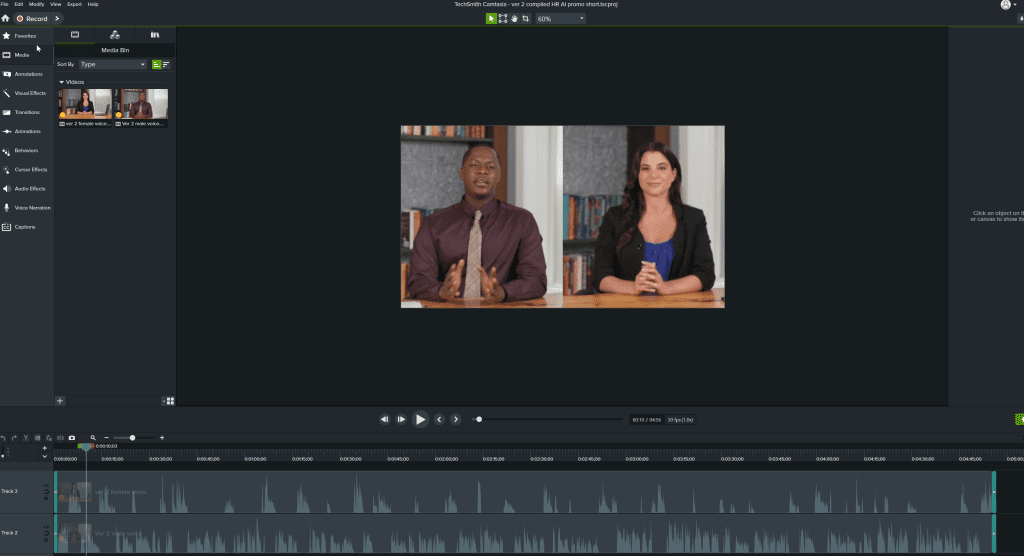

4. Final edits: Camtasia brought it all together. I imported both HeyGen videos into Camtasia, joined them, and exported the result as an MP4, which I uploaded to YouTube. The audio quality and natural tone from NotebookLM elevated the final video.
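The track split in step 2 was done by hand in Audacity, but the underlying idea is simple enough to sketch in code: keep each host's speech on their own track and fill the rest with silence, so both tracks stay the same length and line up when the two avatar videos are joined. The Python below is only an illustration under stated assumptions. The function name `split_by_speaker`, the host labels, and the segment timings are all hypothetical, and the who-speaks-when list would still have to be marked up by ear.

```python
from typing import Dict, List, Sequence, Tuple

# (start_sec, end_sec, host_label) -- hypothetical timings noted by ear
Segment = Tuple[float, float, str]


def split_by_speaker(
    samples: Sequence[int],
    sample_rate: int,
    segments: Sequence[Segment],
    hosts: Sequence[str] = ("A", "B"),
) -> Dict[str, List[int]]:
    """Return one track per host, silent wherever that host is not speaking.

    `samples` is assumed to be mono PCM audio as a sequence of integers.
    Every output track has the same length as the input.
    """
    # Start each host's track as pure silence (all zeros).
    tracks = {host: [0] * len(samples) for host in hosts}

    for start, end, host in segments:
        # Convert seconds to sample indices, clamped to the audio length.
        lo = max(0, int(round(start * sample_rate)))
        hi = min(len(samples), int(round(end * sample_rate)))
        # Copy this stretch of speech onto the speaking host's track only.
        tracks[host][lo:hi] = samples[lo:hi]

    return tracks


# Tiny made-up example: 2 seconds of "audio" at 4 samples per second.
audio = [1, 2, 3, 4, 5, 6, 7, 8]
result = split_by_speaker(audio, 4, [(0.0, 1.0, "A"), (1.0, 2.0, "B")])
print(result["A"])
print(result["B"])
```

Loading real audio into `samples` and writing the two tracks back out (for example with Python's standard `wave` module for WAV files) is left out here, and any stretch not covered by a segment simply ends up silent on both tracks, which is one way to drop the unwanted giggles.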

Key takeaway: While this workflow was more time-intensive, it resulted in, I think, a higher-quality video. NotebookLM’s voices were more human-like, and taking the time to edit the audio with Audacity helped to produce a more polished end product. I also think I can make this a shorter process next time. Much of my time was spent re-learning how to use Audacity :-O

Total time 5 hours.

Here’s the video.

Workflow 2: Jellypod + HeyGen

For the second workflow, I combined Jellypod and HeyGen to create a video podcast. The goal was again to produce a short, engaging 3-4 minute video featuring two coworkers discussing the upcoming workshop. Here’s how I approached it:

1. Script creation: I used ChatGPT to write the script. The script featured two coworkers having a casual conversation about the workshop, complete with essential details like the date and time. This approach allowed for a friendly and relatable tone while keeping the runtime to my target of 3-4 minutes and including exactly the details I wanted. That level of control is difficult to get with NotebookLM.

2. Voice creation: Jellypod was used to generate the custom host voices. Initially, Jellypod created narration based on the description I provided, but I opted not to use the generated script. Instead, I replaced it with the ChatGPT script to ensure accuracy and brevity. You can do this with the free plan as long as you don’t go over your credits. I downloaded the audio as a single MP3 file.

3. Video avatars: Next, I went to HeyGen and created host 1 as an avatar, uploaded the Jellypod MP3 file to that avatar, and downloaded the resulting video so I could edit it in Camtasia. Then I did the same thing for avatar 2. HeyGen did a great job syncing the narration with the avatars’ lip movements, resulting in a polished, if not very expressive, visual.

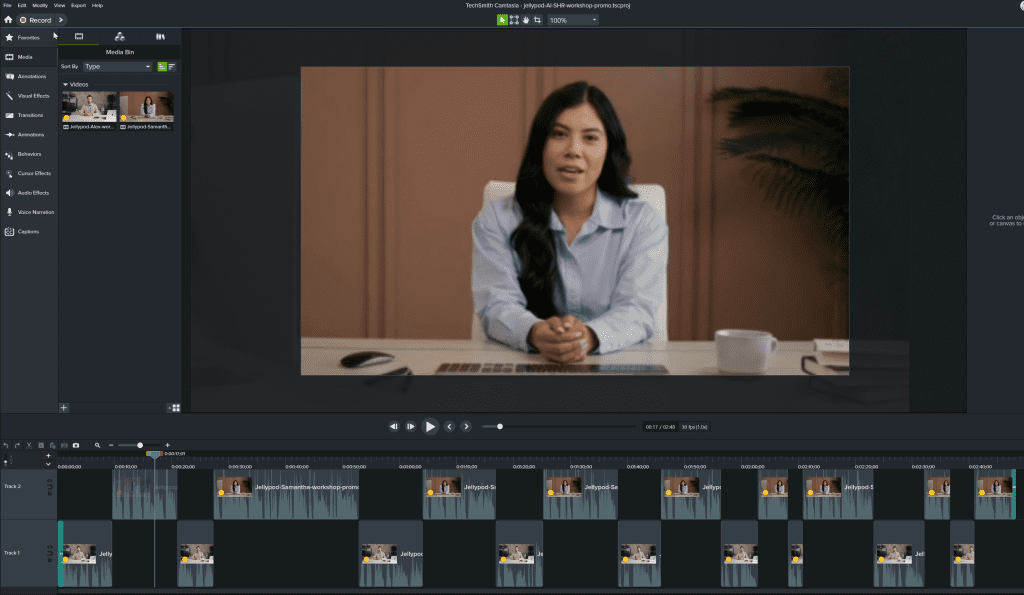

4. Editing: Using Camtasia, I combined the two HeyGen videos into one seamless podcast-style video. The editing process was quick and intuitive. However, the voices generated by Jellypod weren’t as natural-sounding as those from other tools like NotebookLM, which affected the overall quality. I also uploaded this to YouTube.

Key takeaway: This workflow was efficient and straightforward, making it ideal for quick-turnaround projects. The voice quality was pretty good, but there is a lot of room to improve the level of expression in the audio and in the avatars’ facial expressions.

Total time 2.5 hours.

Here’s the video.

Final thoughts

Both workflows had their pros and cons. The NotebookLM + HeyGen workflow took longer but produced, I think, a more engaging video. On the other hand, the Jellypod + HeyGen combo was quick and easy, perfect for when time is short.

If I had to choose one for future projects, it would depend on the priority: speed or quality. For projects with tight deadlines, Jellypod would be my go-to. But for high-stakes projects where audio quality matters most, I’d invest the extra time in the NotebookLM workflow.

One lesson I’ve been slowly learning is that using just one AI tool will probably not do. A few AI apps try to be the Swiss Army knife of AI, but they mostly fall short. Granted, ChatGPT, in my opinion, is pretty close to being a multi-use tool that works fairly well in almost all situations. It’s definitely my most reached-for tool, especially with Canvas and now Tasks. Overall, however, I’m really interested in filling my own AI toolbox with a variety of tools that are suitable for specific projects.

Have you experimented with AI tools for video creation? I’d love to hear about your workflows and a peek into your toolbox!

Post edited with ChatGPT Canvas

Cross posted to LinkedIn