Every few weeks I hear someone say, or post on social media, that AI is in a bubble and it’s bound to burst soon. The tone is usually confident and almost always a little disconnected from what the people building frontier models are seeing day to day. (Frontier models are the ones at the bleeding edge of AI development, and they may not be available to the general public yet.) If you’re not working in AI at or near that level, it’s easy to get swept up in all kinds of conjecture and noise.

The folks closest to the work are not seeing a bubble. What they are seeing is exponential progress: the kind that is steady, reliable, and somewhat scary depending on the situation and your perspective.

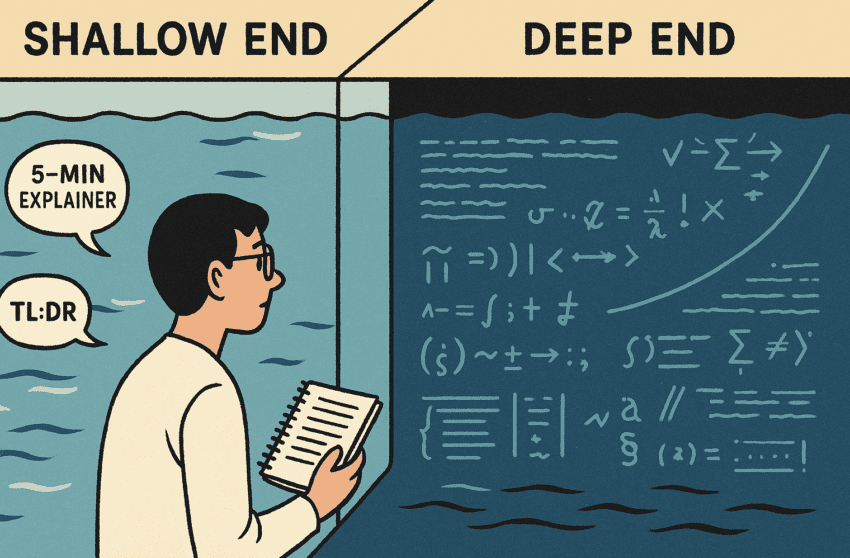

Fortunately we don’t need an advanced degree in math, computer science or even a free weekend to follow what’s happening. We can use AI itself to turn dense material into something anyone can understand, in minutes.

That’s what I want to show you, while we also look at what the folks at the cutting edge are saying about the AI bubble. ’Cos… why not multitask in a blog post?

Starting at the deep end: Julian’s post

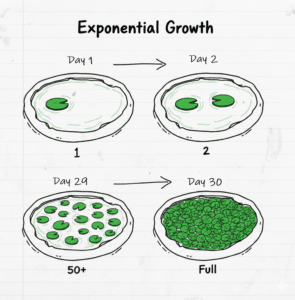

Julian Schrittwieser (now at Anthropic, previously at DeepMind working on AlphaGo Zero and MuZero) published a piece called Failing to Understand the Exponential, Again. It’s the kind of article that lives at the deep end of the complexity pool. It has charts, scaling curves, and a sobering reminder that exponential growth doesn’t look dramatic until right before the big bend in the curve.

For example, think about how compound interest works, or better yet, lily pads spreading across a pond. The growth barely registers for a long time, then suddenly it snowballs. The math is the same. Small, consistent gains look insignificant, until they don’t.
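If you want to see the lily pad effect in numbers, here is a minimal Python sketch. The setup is my own illustration, not something from Julian’s post: I’m assuming a hypothetical pond where the lily pads double their coverage every day and fill the pond on day 30. The names `FULL_DAY`, `coverage`, and `first_day_at_least` are made up for this example.

```python
# Hypothetical pond: lily pad coverage doubles every day and the pond
# is completely covered on day FULL_DAY. These numbers are illustrative
# assumptions, not data from the post.
FULL_DAY = 30


def coverage(day: int, full_day: int = FULL_DAY) -> float:
    """Fraction of the pond covered on `day`, assuming daily doubling."""
    return 2.0 ** (day - full_day)


def first_day_at_least(fraction: float, full_day: int = FULL_DAY) -> int:
    """First day on which coverage reaches at least `fraction` of the pond."""
    day = 0
    while coverage(day, full_day) < fraction:
        day += 1
    return day


# Print coverage at a few checkpoints to see how late the growth shows up.
for day in (10, 20, 25, 29, 30):
    print(f"day {day:2d}: {coverage(day):.6%} covered")
```

Under these assumptions the pond is still only half covered on day 29, and it does not reach even 1% coverage until day 24. Most of the visible change happens in the last few days, which is the whole point of the metaphor.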

This isn’t guesswork or vibes. Julian is looking at hard data: performance curves that keep climbing across different models and over many years.

His conservative forecast looks like this:

- By mid-2026, models will be able to work autonomously for eight hours at a time.

- Before the end of 2026, at least one model will match human experts across many industries.

- By the end of 2027, outperforming experts will be common, not surprising.

To people working on frontier models, this isn’t sci-fi. It’s the straightforward continuation of the trends they see every day.

So no, “bubble” is not the word they’re using.

Moving toward the surface: the podcast version

If Julian’s blog is the deep dive, the next step toward the shallower end is his interview on the MAD Podcast with Matt Turck. It’s an hour long, still a bit technical, but conversational and easier to follow. He explains where these trends come from and why the pace of progress feels so strange from the outside.

The core idea is simple. Exponential curves are notoriously hard for humans to reason about. We tend to be linear thinkers, but we are now living in an exponential moment. That mismatch fuels the misunderstanding I keep hearing in conversations about AI. It’s also contributing to the idea that there must be a bubble.

A 20-minute shortcut: Nate’s summary

Then you have Nate B. Jones’ video, which compresses the whole discussion into a quick, energetic 20-minute explainer. Keeping the metaphor going, it’s the place in the pool where you can reach the bottom on your tiptoes. He pulls out the essential points:

- The “AI bubble” narrative doesn’t match what leading researchers are seeing.

- The scaling laws that got us here are still working.

- Slowdown isn’t the pattern; acceleration is.

It’s still a bit technical, but reachable, and it really nails the key takeaways. Even so, it could still be a bit of a struggle for those completely new to tech or AI. Not everyone knows what scaling laws are. I mean, why would you?

A five-minute explanation using AI itself

To show what’s possible when you use AI as a learning tool, I fed the 60+ minute podcast into NotebookLM and turned it into a five-minute explainer video.

No jargon. No complicated graphs. No need for background knowledge. This is the shallow end of the pool where it’s nice and comfy, and easy to get into.

It just focuses on what the experts are saying, why the “AI bubble” narrative doesn’t hold up, and what to watch over the next few years.

And here’s the prompt I used in NotebookLM:

Using just the selected resource, summarize and explain the key points for a novice audience that knows very little about AI. A 14 year old should be able to understand the video you create. Use metaphors and analogies when appropriate and helpful.

This part is important. You don’t have to rely on second-hand takes (like mine), viral threads, or opinion pieces that flatten the whole field into vibe-based headlines. You can, all on your own, use AI to digest the raw material and get a clear, reliable explanation in whatever format you prefer, including text, audio, slides, or video.

That shift alone changes who gets to participate in these conversations. It kicks the door wide open. All you have to do is saunter through.

Why this matters now

If you assume we’re in a bubble, you make bubble-shaped decisions. This can mean delaying adoption, neglecting or postponing skill development, and putting governance on hold or moving at a snail’s pace while waiting for the bubble to burst. Believing we are in an AI bubble suggests that we have time, that we can slow our roll because the whole thing will settle down soon. I think that’s a dangerous perspective.

If, however, we assume we’re in a period of rapid, exponential growth – because that’s what the data shows – then the decisions look very different:

- We prioritize learning.

- We adapt early.

- We plan for impact across every role, sector, and skill level.

- We build guardrails now, not “once things slow down.”

These choices matter. Waiting for things to “calm down” is a risk. Maybe too big a risk, depending on who, and how many people, our decisions affect.

Bringing it all together

The conversation about AI doesn’t need to be mysterious or exclusive. You can start with the original complex sources, move through expert summaries, and end with customized explanations made by AI for you.

That’s the real story here. We are experiencing exponential progress in the technology and, if you choose to use the tech, exponential progress in how we can understand it.

If you want to explore this yourself, here are the pieces in order of complexity:

- Julian Schrittwieser’s original post

- The hour-long MAD Podcast interview

- Nate B. Jones’ 20-minute summary

- A five-minute NotebookLM explainer

Each step builds on the last. Each step makes the ideas more accessible.

And each step reinforces the same message. This isn’t a bubble, it’s a curve. And we are quickly approaching the part where the line starts bending real fast.

Post co-written with AI – mostly ChatGPT, but parts were edited with Claude, then re-edited by me, then thrown back into ChatGPT, and of course there is a pinch of Gemini and NotebookLM in there… and cross-posted to LinkedIn.